Amit Joshi

Airflow tutorial 1: Introduction to Apache Airflow

28 August: Amit Joshi

The goal of this blog is to answer these two questions:

- What is Airflow?

- What are its use cases, and why do we need Airflow?

What is Airflow?

Airflow is a platform to programmatically author, schedule and monitor workflows and data pipelines.

Use Airflow to author workflows as Directed Acyclic Graphs (DAGs) of tasks. The Airflow scheduler executes your tasks on an array of workers while following the specified dependencies. Rich command line utilities make performing complex surgeries on DAGs a snap. The rich user interface makes it easy to visualize pipelines running in production, monitor progress, and troubleshoot issues when needed.

When workflows are defined as code, they become more maintainable, versionable, testable, and collaborative.

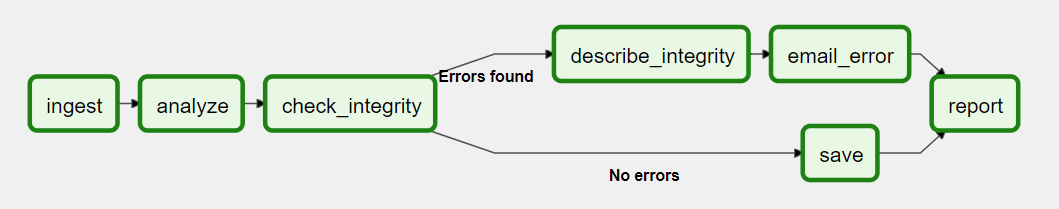

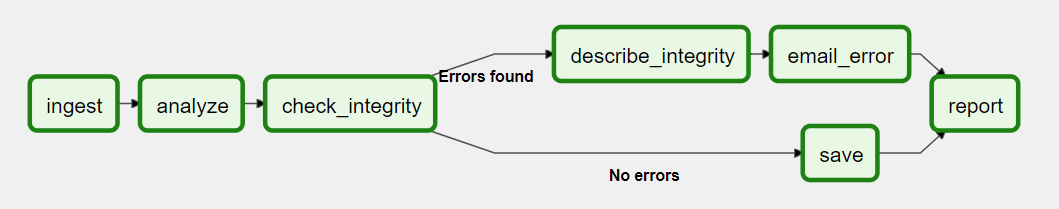

A typical workflow

- Download data from source

- Send data somewhere else to process

- Monitor when the process is completed

- Get the result and generate the report

- Send the report out by email
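The five steps above form a simple chain of dependencies. As a minimal sketch (plain Python with the standard-library `graphlib` module, not Airflow's own API), the workflow can be written as a graph and sorted into a valid execution order. The task names are hypothetical labels for the steps in the list.

```python
from graphlib import TopologicalSorter

# Each key is a task; its value is the set of tasks that must finish first.
# The task names are hypothetical labels for the five steps listed above.
workflow = {
    "download_data": set(),
    "send_for_processing": {"download_data"},
    "monitor_processing": {"send_for_processing"},
    "generate_report": {"monitor_processing"},
    "email_report": {"generate_report"},
}


def execution_order(graph):
    """Return one valid order in which the tasks can run."""
    return list(TopologicalSorter(graph).static_order())


order = execution_order(workflow)
print(order)
```

Because every step depends on the previous one, there is only one valid order here. A graph with branches would allow several.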

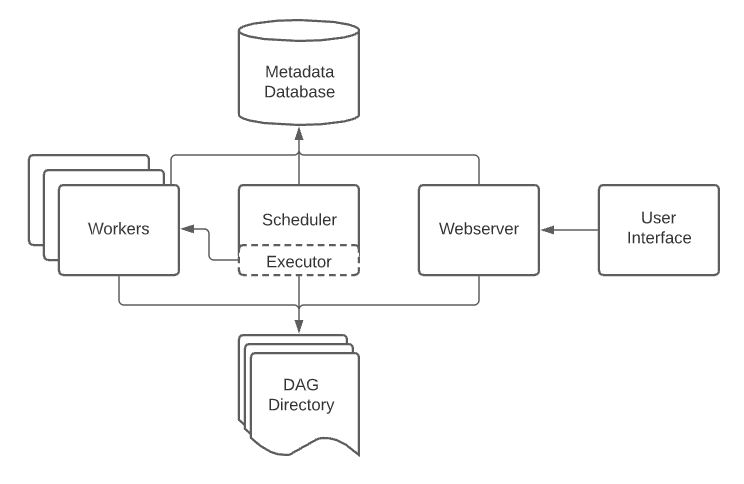

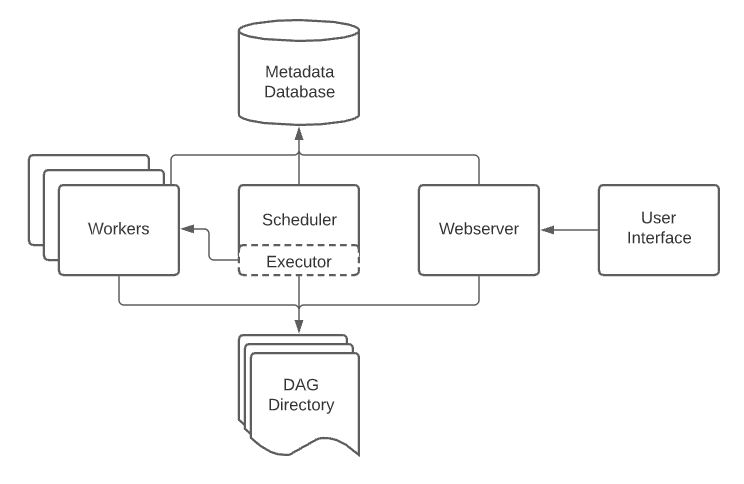

Airflow Architecture

Airflow is a platform that lets you build and run workflows. A workflow is represented as a DAG (a Directed Acyclic Graph), and contains individual pieces of work called Tasks, arranged with dependencies and data flows taken into account.

A DAG specifies the dependencies between Tasks, and the order in which to execute them and run retries; the Tasks themselves describe what to do, be it fetching data, running analysis, triggering other systems, or more.

An Airflow installation generally consists of the following components:

- A scheduler, which handles both triggering scheduled workflows, and submitting Tasks to the executor to run.

- An executor, which handles running tasks. In the default Airflow installation, this runs everything inside the scheduler, but most production-suitable executors actually push task execution out to workers.

- A webserver, which presents a handy user interface to inspect, trigger and debug the behaviour of DAGs and tasks.

- A folder of DAG files, read by the scheduler and executor (and any workers the executor has).

- A metadata database, used by the scheduler, executor and webserver to store state.
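To make the division of labour between these components concrete, here is a toy sketch in plain Python. It is not how Airflow is implemented: the classes `MetadataDB`, `InlineExecutor`, and `Scheduler` are hypothetical stand-ins, and the state strings are chosen only for illustration. The scheduler walks tasks in dependency order, the executor runs them, and both record state in a shared store.

```python
from graphlib import TopologicalSorter


class MetadataDB:
    """Toy stand-in for the metadata database: maps task id to state."""

    def __init__(self):
        self.state = {}

    def set_state(self, task_id, state):
        self.state[task_id] = state


class InlineExecutor:
    """Toy executor that runs each task in the current process."""

    def __init__(self, db):
        self.db = db

    def run(self, task_id, fn):
        self.db.set_state(task_id, "running")
        try:
            fn()
        except Exception:
            self.db.set_state(task_id, "failed")
        else:
            self.db.set_state(task_id, "success")


class Scheduler:
    """Toy scheduler: walks tasks in dependency order and submits them."""

    def __init__(self, executor, db):
        self.executor = executor
        self.db = db

    def run_dag(self, tasks, deps):
        for task_id in TopologicalSorter(deps).static_order():
            upstream = deps.get(task_id, set())
            if all(self.db.state.get(u) == "success" for u in upstream):
                self.executor.run(task_id, tasks[task_id])
            else:
                # Do not run a task whose upstream tasks did not succeed.
                self.db.set_state(task_id, "upstream_failed")


def failing_transform():
    raise RuntimeError("transform failed")


# Hypothetical three-task pipeline in which the middle task fails.
tasks = {
    "extract": lambda: None,
    "transform": failing_transform,
    "load": lambda: None,
}
deps = {"extract": set(), "transform": {"extract"}, "load": {"transform"}}

db = MetadataDB()
Scheduler(InlineExecutor(db), db).run_dag(tasks, deps)
print(db.state)
```

In this run `extract` succeeds, `transform` fails, and `load` is never executed because its upstream task failed. The final contents of the store are what a user interface would read to display task status.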

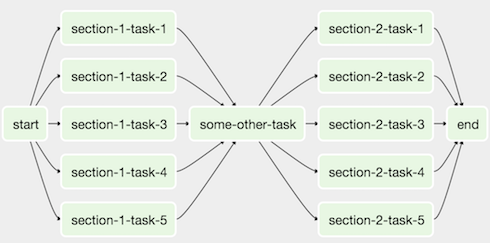

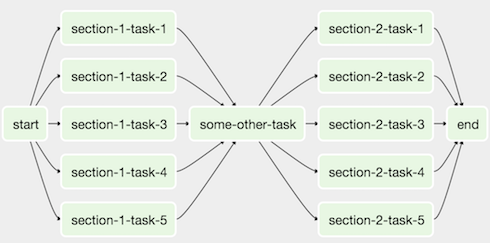

Airflow DAG

- A workflow is a Directed Acyclic Graph (DAG) of multiple tasks; tasks that do not depend on each other can be executed independently.

- Airflow DAGs are composed of Tasks.

Control Flow

DAGs are designed to be run many times, and multiple runs of them can happen in parallel. DAGs are parameterized, always including a date they are "running for" (the execution_date), but with other optional parameters as well.
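The idea of a run that is parameterized by the date it is "running for" can be sketched without Airflow at all. In the minimal example below, `daily_run_dates` and `run_for` are hypothetical helpers, and the file path pattern is invented for illustration. Each run receives its own date, so the same logic can be replayed for any historical day.

```python
from datetime import date, timedelta


def daily_run_dates(start, end):
    """Yield one date per day from start to end, inclusive."""
    current = start
    while current <= end:
        yield current
        current += timedelta(days=1)


def run_for(execution_date):
    """Hypothetical task body: pick the input file for the given date."""
    return f"events/{execution_date.isoformat()}.csv"


# One run per day; each run only sees the date it is running for.
runs = [run_for(d) for d in daily_run_dates(date(2023, 8, 26), date(2023, 8, 28))]
print(runs)
```

Because a run depends only on its date, the runs for different days do not interfere with each other, which is what makes running them in parallel or re-running a past interval safe.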

Tasks have dependencies declared on each other. You'll see this in a DAG either using the >> and << operators:

    first_task >> [second_task, third_task]
    third_task << fourth_task

Or, with the set_upstream and set_downstream methods:

    first_task.set_downstream([second_task, third_task])
    third_task.set_upstream(fourth_task)
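To see why both spellings are equivalent, it helps to look at how such operators can be built with Python's operator overloading. The `Task` class below is a toy written for this post, not Airflow's operator class. It supports only the two statements shown above, with `>>` and `<<` delegating to `set_downstream` and `set_upstream`.

```python
class Task:
    """Toy task that records its upstream and downstream task ids."""

    def __init__(self, task_id):
        self.task_id = task_id
        self.upstream = set()
        self.downstream = set()

    @staticmethod
    def _as_list(others):
        # Accept either a single task or a list of tasks.
        return others if isinstance(others, list) else [others]

    def set_downstream(self, others):
        for other in self._as_list(others):
            self.downstream.add(other.task_id)
            other.upstream.add(self.task_id)

    def set_upstream(self, others):
        for other in self._as_list(others):
            other.set_downstream(self)

    def __rshift__(self, others):
        # a >> b means "b runs after a".
        self.set_downstream(others)
        return others

    def __lshift__(self, others):
        # a << b means "a runs after b".
        self.set_upstream(others)
        return others


first_task = Task("first_task")
second_task = Task("second_task")
third_task = Task("third_task")
fourth_task = Task("fourth_task")

first_task >> [second_task, third_task]
third_task << fourth_task

print(sorted(first_task.downstream))
print(sorted(third_task.upstream))
```

After these two statements, `third_task` has both `first_task` and `fourth_task` as upstream tasks, so it cannot start until both have finished.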

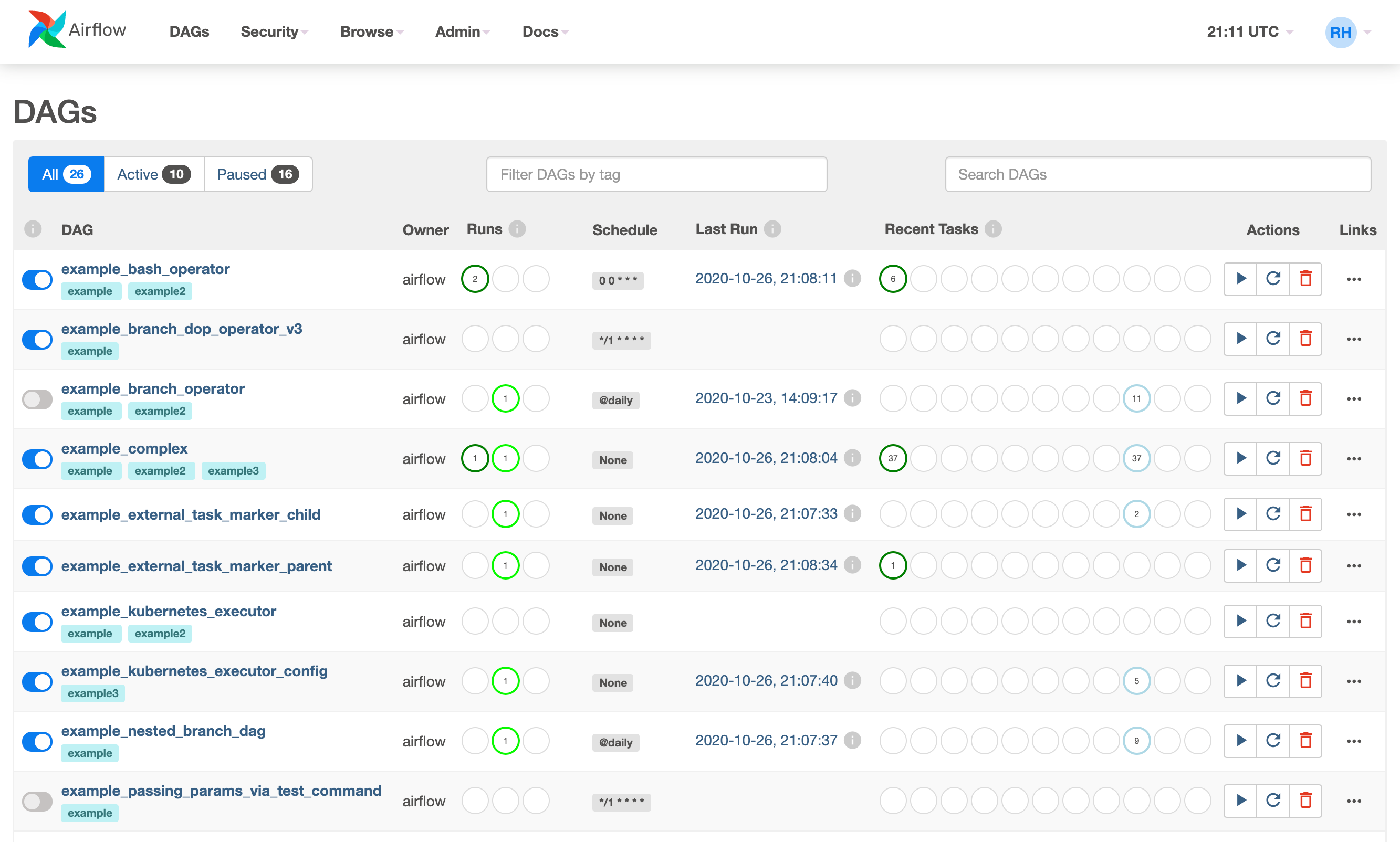

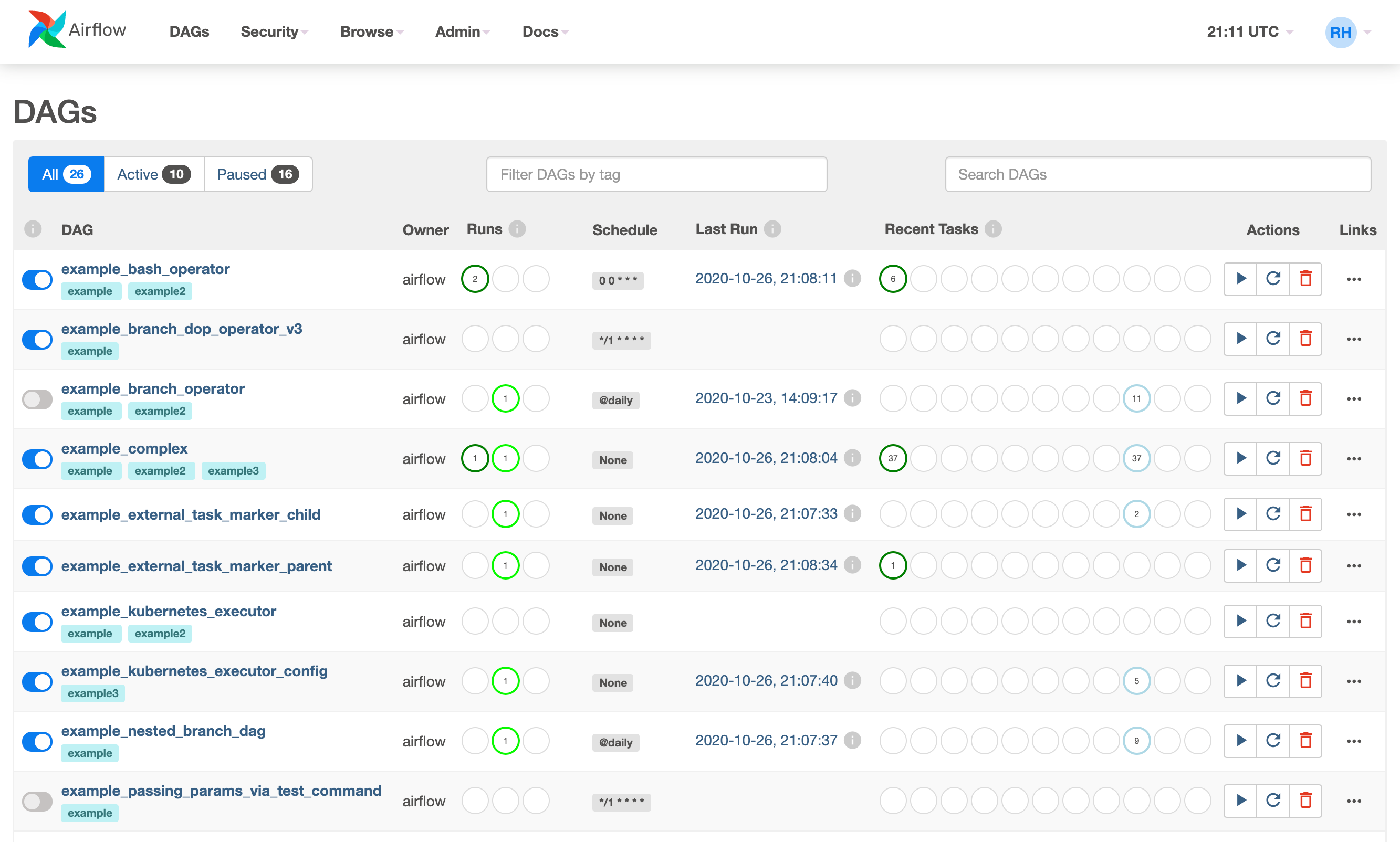

User interface

Airflow comes with a user interface that lets you see what DAGs and their tasks are doing, trigger runs of DAGs, view logs, and do some limited debugging and resolution of problems with your DAGs.

It's generally the best way to see the status of your Airflow installation as a whole, as well as diving into individual DAGs to see their layout, the status of each task, and the logs from each task.

What makes Airflow great?

- Handles upstream/downstream dependencies gracefully (for example, missing upstream tables)

- Easy to reprocess historical jobs by date, or to re-run a specific interval

- Handles errors and failures gracefully, and automatically retries when a task fails

- Easy deployment of workflow changes

- Integrations with a lot of infrastructure (AWS, Google Cloud, Hive, Druid, etc.)

- Job testing through Airflow itself

- Accessibility of log files and other metadata through the web UI

- Real-time monitoring of all job statuses, plus email alerts
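The automatic retry behaviour mentioned in the list can be illustrated with a small standalone sketch. This is not Airflow's retry implementation: `run_with_retries` and `flaky_download` are hypothetical, and the parameter names `retries` and `retry_delay` are borrowed only as familiar labels. The wrapper calls a task again after a failure until it succeeds or runs out of attempts.

```python
import time


def run_with_retries(task, retries=3, retry_delay=0.0):
    """Call task(); after an exception, retry up to `retries` more times."""
    attempt = 0
    while True:
        try:
            return task()
        except Exception:
            if attempt >= retries:
                # Out of retries: let the last error propagate.
                raise
            attempt += 1
            time.sleep(retry_delay)


calls = {"count": 0}


def flaky_download():
    """Hypothetical task that fails twice and then succeeds."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("source temporarily unavailable")
    return "ok"


result = run_with_retries(flaky_download, retries=3)
print(result, calls["count"])
```

Here the task succeeds on its third call, so the caller never sees the two transient failures. If the task kept failing, the last exception would be raised after the final retry.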
